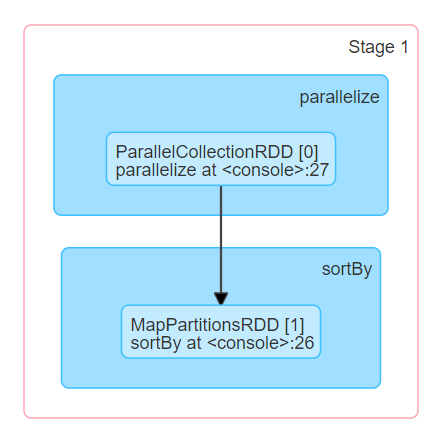

Execute two programs in spark-shell.

The first program uses sortBy:

import org.apache.spark.rdd.RDD

val list1: List[(String, Int)] = List(("the", 12), ("they", 2), ("do", 4), ("wild", 1), ("and", 5), ("into", 4))

val listRDD1: RDD[(String, Int)] = sc.parallelize(list1)

val result1: RDD[(String, Int)] = listRDD1.sortBy(_._2, false)

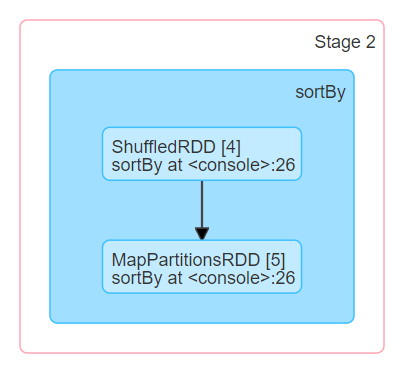

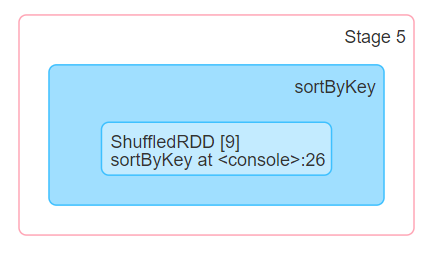

result1.collect()

Looking at the program's DAG in the web UI shows three stages:

4 MapPartitionsRDD, 3 ShuffledRDD, shuffledRDD

sortBy:

keyBy produces a MapPartitionsRDD before the shuffle, and values produces another MapPartitionsRDD from the shuffled data; between the two MapPartitionsRDDs sits sortByKey.

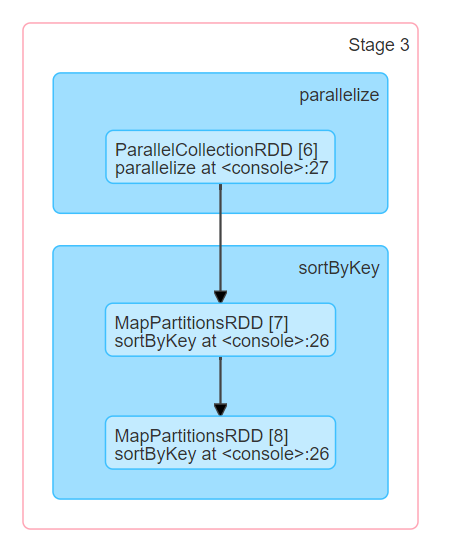
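To make the decomposition concrete, here is a minimal sketch that spells out the same chain by hand and compares it with sortBy. It assumes the behaviour described above (keyBy, then sortByKey, then values); the names `ctx`, `pairs`, `keyed`, `sorted`, `manual` and the app name are made up for illustration. `SparkContext.getOrCreate` should reuse the existing context when run inside spark-shell.

```scala
import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.rdd.RDD

// Hypothetical context name; in spark-shell this should return the shell's own context.
val ctx = SparkContext.getOrCreate(
  new SparkConf().setMaster("local[2]").setAppName("sortBy-sketch"))

val pairs = List(("the", 12), ("they", 2), ("do", 4), ("wild", 1), ("and", 5), ("into", 4))
val rdd: RDD[(String, Int)] = ctx.parallelize(pairs)

// The one-liner from the post.
val viaSortBy: RDD[(String, Int)] = rdd.sortBy(_._2, ascending = false)

// The same chain written out step by step.
val keyed: RDD[(Int, (String, Int))] = rdd.keyBy(_._2)                      // map before the shuffle
val sorted: RDD[(Int, (String, Int))] = keyed.sortByKey(ascending = false)  // the shuffle itself
val manual: RDD[(String, Int)] = sorted.values                              // map after the shuffle

// Print the concrete RDD class created at each step.
Seq("keyBy" -> keyed, "sortByKey" -> sorted, "values" -> manual).foreach {
  case (step, r) => println(s"$step -> ${r.getClass.getSimpleName}")
}

// Compare only the sort keys: the relative order of tied keys (the two 4s) is not guaranteed.
println(viaSortBy.collect().map(_._2).toList)
println(manual.collect().map(_._2).toList)
```

If the class names printed for keyBy and values are both MapPartitionsRDD, that would account for the two MapPartitionsRDD boxes on either side of the shuffle in the DAG.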


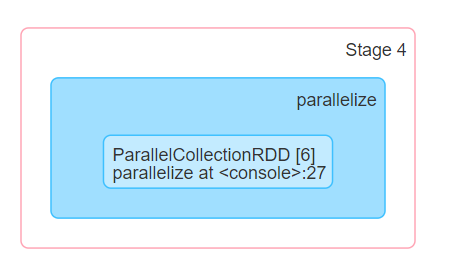

DAG stages:

The DAG does indeed contain two MapPartitionsRDDs, but how is each of them generated? And why is there another parallelize stage in the middle? If anyone more experienced knows the answer, please explain.
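One possible way to investigate, offered as a sketch rather than a definitive answer: print the lineage with `toDebugString`, and construct a `RangePartitioner` directly. As far as I understand the Spark source, sortByKey builds a RangePartitioner, and that partitioner samples the keys of its input RDD when it is constructed in order to choose range boundaries. If so, the sampling would run as a separate job that reads from parallelize again, which might be the extra parallelize phase. The names `ctx`, `pairs`, `keyed`, `partitioner`, `lineage` and the app name are made up for illustration.

```scala
import org.apache.spark.{RangePartitioner, SparkConf, SparkContext}
import org.apache.spark.rdd.RDD

// Hypothetical context name; in spark-shell this should return the shell's own context.
val ctx = SparkContext.getOrCreate(
  new SparkConf().setMaster("local[2]").setAppName("sortBy-lineage-sketch"))

val pairs = List(("the", 12), ("they", 2), ("do", 4), ("wild", 1), ("and", 5), ("into", 4))
val rdd: RDD[(String, Int)] = ctx.parallelize(pairs)
val keyed: RDD[(Int, (String, Int))] = rdd.keyBy(_._2)

// Building the partitioner by hand, with no action called on `keyed`.
// Assumption: with more than one partition this samples the keys right here,
// so a job should show up in the web UI's Jobs tab at this point.
val partitioner = new RangePartitioner[Int, (String, Int)](keyed.partitions.length, keyed, false)
println(s"range partitions: ${partitioner.numPartitions}")

// Text form of the lineage that the web UI draws as a DAG.
val lineage: String = rdd.sortBy(_._2, ascending = false).toDebugString
println(lineage)
```

Watching the Jobs tab while pasting these lines into spark-shell one at a time should show whether a job appears before any collect() is called.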