Hello, experts!

Sample log line:

    100.97.73.229 - - [19/Feb/2019:17:43:11 +0800] "GET /news-spread_index-138.html HTTP/1.1" 7920 "-" "Mozilla/5.0 (Linux; Android 8.1; MI 6X Build/OPM1.171019.011; wv) AppleWebKit/537.36 (KHTML, like Gecko) Version/4.0 Chrome/57.0.2987.132 MQQBrowser/6.2 TBS/044307 Mobile Safari/537.36 Imou"

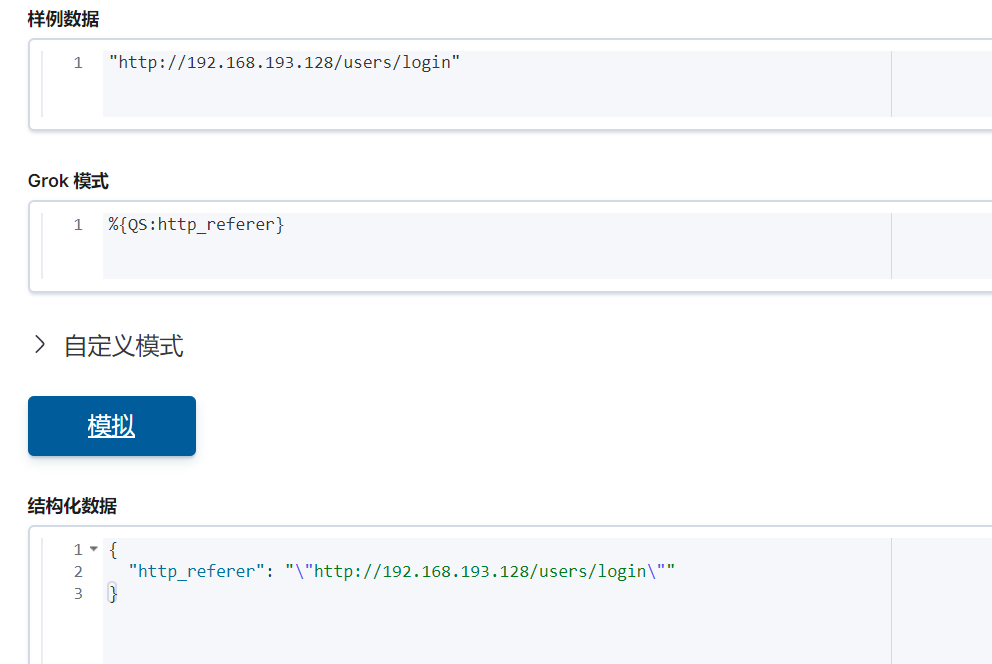

Grok pattern:

    %{IPORHOST:client_ip} %{USER:ident} %{USER:auth} \[%{HTTPDATE:timestamp}\] "(?:%{WORD:verb} %{NOTSPACE:request}(?: HTTP/%{NUMBER:http_version})?|-)" %{NUMBER:response} (?:%{NUMBER:bytes}|-) %{QUOTEDSTRING:domain} %{QUOTEDSTRING:data}
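One way to check the pattern on its own, outside the Kafka pipeline, is a throwaway pipeline that reads lines from stdin and prints the resulting events. This is a minimal sketch: the file name `grok-test.conf` is hypothetical, and it assumes a local Logstash install started with `bin/logstash -f grok-test.conf`, after which you paste the sample line and press Enter. When grok fails to match, it tags the event with `_grokparsefailure` (the default `tag_on_failure`) and adds none of the named fields. Note that the pattern expects two values after the quoted request (`response`, then `bytes` or `-`), while the sample line as posted shows only one number (`7920`) there; if the real log lines look like that, the match would fail at that point.

```conf
# Hypothetical test pipeline (grok-test.conf): checks the grok pattern in isolation.
input {
  # Each line typed or pasted into the terminal becomes one event in "message".
  stdin {}
}

filter {
  grok {
    # Same pattern as in the lcshop-log branch of the main config.
    match => { "message" => "%{IPORHOST:client_ip} %{USER:ident} %{USER:auth} \[%{HTTPDATE:timestamp}\] \"(?:%{WORD:verb} %{NOTSPACE:request}(?: HTTP/%{NUMBER:http_version})?|-)\" %{NUMBER:response} (?:%{NUMBER:bytes}|-) %{QUOTEDSTRING:domain} %{QUOTEDSTRING:data}" }
  }
}

output {
  # Prints every event with all of its fields; a failed match shows
  # "_grokparsefailure" in the "tags" field.
  stdout { codec => rubydebug }
}
```

If the pasted line comes back with `client_ip`, `timestamp`, `response` and the other fields populated, the pattern itself is fine and the difference lies in what actually arrives from Kafka.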

Logstash configuration:

    input {
      kafka {
        bootstrap_servers => "172.31.0.84:9092"    # kafka ip
        topics => ["lcshop-log","lcshop-errorlog"]  # topic
        decorate_events => "true"
        codec => plain
      }
    }

    filter {
      if [@metadata][kafka][topic] == "lcshop-log" {
        mutate {
          add_field => {"[@metadata][index]" => "lcshop-log-%{+YYYY-MM}"}
        }
        grok {
          match => { "message" => "%{IPORHOST:client_ip} %{USER:ident} %{USER:auth} \[%{HTTPDATE:timestamp}\] \"(?:%{WORD:verb} %{NOTSPACE:request}(?: HTTP/%{NUMBER:http_version})?|-)\" %{NUMBER:response} (?:%{NUMBER:bytes}|-) %{QUOTEDSTRING:domain} %{QUOTEDSTRING:data}"}
          remove_field => ["message"]
        }
      } else if [@metadata][kafka][topic] == "lcshop-errorlog" {
        mutate {
          add_field => {"[@metadata][index]" => "lcshop-errorlog-%{+YYYY-MM}"}
        }
      }
    }

    output {
      elasticsearch {
        hosts => ["172.31.0.76:9200"]        # es ip
        index => "%{[@metadata][index]}"     # topic index
      }
    }
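To see what grok actually does to the events coming from Kafka, a temporary stdout output can sit next to the elasticsearch one. This is a sketch assuming the rest of the config stays unchanged; with `metadata => true` the rubydebug codec also prints `@metadata`, so the Kafka topic and the computed index name are visible as well. Since grok's `remove_field` only runs when the match succeeds, events that still carry `message` and show `_grokparsefailure` in `tags` mean the pattern does not match the messages as they arrive (for example, if the producer adds a prefix or wraps each line in JSON, which is an assumption to verify against your producer).

```conf
output {
  elasticsearch {
    hosts => ["172.31.0.76:9200"]
    index => "%{[@metadata][index]}"
  }
  # Temporary debug output: prints each event, including @metadata,
  # so you can check for grok fields or a "_grokparsefailure" tag.
  # Remove it once the problem is found.
  stdout { codec => rubydebug { metadata => true } }
}
```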

In Kibana the index is created successfully, but the grok filter does not seem to take effect. Where is the problem?